AI Blind Spot

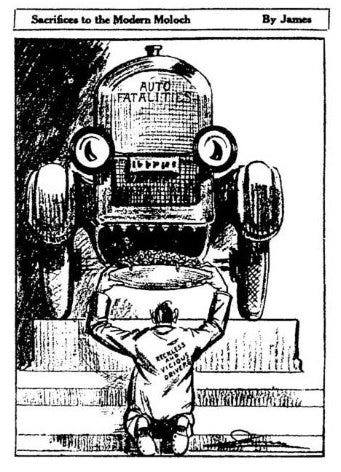

History repeats itself, and nowhere is this truer than in technology. For instance, it took 26 years from the invention of the automobile in 1886 to the installation of the first electric traffic light in 1912. Safety measures like traffic regulations, seatbelts, and road infrastructure typically emerge only after we experience the negative consequences of a new technology.

Regulating AI appears to be no exception to this pattern.

Consider Apple's recent partnership with OpenAI to integrate AI features into its upcoming iOS 18 update. This strategic move, while potentially lucrative for Apple, has been met with skepticism. Elon Musk, for instance, has expressed concerns about the privacy and security implications of this partnership. While Apple and OpenAI have stated that users will have the option to opt out of AI features, the specifics remain unclear.

This lack of transparency is concerning, especially given the findings of a recent study by Lee et al. (2024), which revealed that in 93% of cases, AI either exacerbated or created new privacy risks, such as deepfakes.

While governments worldwide are attempting to regulate AI, many proposed regulations overlook a crucial aspect: the human factor.

Regulatory Challenges: Missing Human Factor

The European Union's AI Act, particularly Article 5, represents a significant step towards responsible AI governance. However, its effectiveness hinges on accounting for the human factor, which technical evaluations often overlook.

A recent study by Zhong et al. highlights this gap, arguing that Article 5's focus on the "intention" of AI developers is insufficient. Humans, even with the best intentions, are prone to cognitive biases that can unintentionally lead to the creation of manipulative AI systems. The study also describes the subtle ways AI can be used for manipulation, through both "subliminal techniques" and techniques that directly exploit cognitive biases. Because these methods often operate below conscious awareness, AI regulations need to move beyond the developer's intent and consider the actual impact on individual behaviour.

Subliminal Techniques

Operating beneath the threshold of conscious awareness, subliminal techniques can influence behaviour without individuals even realizing they are being targeted. The study identified three key methods AI systems might utilize:

Tachistoscopic Presentation: Information is flashed so briefly that it bypasses conscious processing, yet still registers subconsciously.

Masked Stimulus: Stimuli are concealed, preventing conscious recognition while still impacting decision-making.

Conceptual Priming: Specific concepts or ideas are subtly activated in individuals' minds, influencing subsequent behaviour without their knowledge.

Manipulative Techniques

Unlike subliminal techniques, manipulative techniques distort the decision-making process itself, potentially leading individuals towards choices that do not serve their best interests. The study highlighted five such techniques AI systems could leverage:

Representativeness Heuristic: Exploiting stereotypes and biases, AI could present information in a way that feels familiar, even if it's misleading.

Availability Heuristic: By selectively presenting readily available information, AI could skew perceptions and influence decisions.

Anchoring Effect: AI could leverage the human tendency to fixate on initial information, using it as an anchor to sway subsequent judgments.

Status Quo Bias: AI could exploit the preference for the familiar, nudging individuals towards maintaining existing states even when change would be beneficial.

Social Conformity: By highlighting perceived group norms or societal expectations, AI could pressure individuals into conforming, even against their better judgment.

Current AI regulations, while a necessary first step, expose a critical gap in our understanding of how AI systems influence human behaviour. Emerging research reveals tangible impacts, such as writers accepting lower pay when aided by AI, which underscores the need to move beyond technical evaluations and consider the subtle ways AI shapes human actions. Furthermore, biases persist in AI outputs even in models trained on massive datasets, which shows how complex this challenge is.

Adding to these concerns, employees at leading AI companies, including OpenAI and Google DeepMind, are raising the alarm about the potential risks of advanced AI. They are advocating for a "right to warn" the public without fear of retaliation, citing insufficient safeguards and the potential for harm. These individuals, including prominent figures in the AI community, argue that traditional whistleblower protections are inadequate for addressing the unique challenges posed by rapidly evolving AI technologies. They propose a shift in industry incentives, prioritizing safety over profit and ensuring that companies are held accountable for fulfilling their public commitments to responsible AI development.

As we navigate this uncharted territory, the decades-long struggle to ensure road safety offers a sobering lesson: developing effective AI governance requires acknowledging the limitations of our current understanding, embracing ongoing research and adaptation, and, most importantly, learning from the mistakes of our technological past.

Behavioural RoundUp

Insurance Helped 46,000 Indian Women Avoid Deadly Work During Heat Waves

In Ahmedabad, India, an innovative insurance program has provided critical relief to 46,000 women labourers during scorching heat waves. Operated by ICICI Lombard and managed by the Self-Employed Women’s Association (SEWA), the program offers parametric insurance payouts when temperatures surpass 43.6°C, aiding women like Lataben Arvindbhai Makwana. These payments, totalling $340,000 last month, ease the financial strain of wages lost to extreme heat and help recipients afford essentials like food and medication. Supported by Climate Resilience for All, the initiative aims to expand, potentially covering SEWA’s 2.9 million members. It demonstrates a novel approach to climate resilience and gender equality in vulnerable communities. Read more at Bloomberg.

Cities know how to improve traffic. They keep making the same colossal mistake.

New York City’s congestion pricing plan aimed to reduce traffic and pollution while funding public transportation, but was postponed by Governor Hochul due to economic concerns. The decision underscores a broader urban planning issue: cities often prioritize drivers over residents, negatively impacting livability and transit efficiency. Congestion pricing, proven effective in cities like London and Stockholm, would have discouraged driving and generated revenue for transit improvements. From a behavioural economics perspective, the delay reflects a bias towards short-term economic fears rather than long-term benefits and public welfare, perpetuating a cycle of unsustainable urban development. Read more at Vox.

Liz Taylor and the Law of Art

In 1963, Liz Taylor acquired a van Gogh at auction, which was later claimed by the original owner's heirs as Nazi loot. Taylor won the lawsuit on statute-of-limitations and good-faith grounds, and her estate sold the painting for $16 million in 2012. This case, along with the other examples discussed in Ventoruzzo's book “Il van Gogh di Liz Taylor”, raises interesting questions about intellectual property and behavioural ethics in the art world. Written like a legal thriller, the book reveals the art world's complexities, answering questions such as: What defines art under the law? How do auctions and art markets operate? What happens in cases of theft and forgery? It also examines how art's role evolves from celebrating power to challenging norms, making it a captivating read for anyone curious about art's legal and cultural dimensions. Read more here.